- Created by Stephen Fuqua, last modified on Apr 15, 2024

You are viewing an old version of this page. View the current version.

Compare with Current View Page History

« Previous Version 14 Current »

Introduction

Relational databases have robust support for relationship management. Naturally 😉. And document databases do not. This article reviews the steps taken in project Meadowlark to ensure a high level of referential integrity for data submitted to the API.

Scenarios

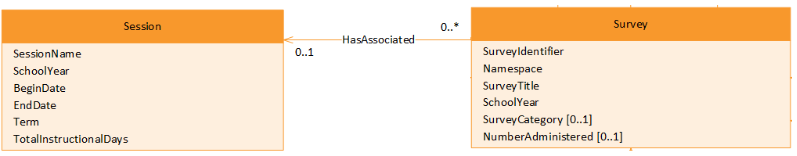

The following diagram is from the Ed-Fi Data Model 3.3 documentation on the Survey Domain, simplified to show a single relationship: a Survey has an associated Session. This will be our exemplar that stands in for many different situations in the Ed-Fi Data Model.

One of the key concepts behind the Ed-Fi system is to ensure a high degree of validity to data. Aside from enforcing some basic type constraints (e.g. not submitting a SchoolYear "202eeee"), the primary validation rule is to ensure that related entities exist and to prevent removing one entity when another entity references it. Within Meadowlark, because of the NoSQL database design, these entities take the form of JSON documents, which are analogous to the records found in a traditional relational database.

Relational databases enforce this reference validation through foreign keys. Document databases do not – at least, not traditionally, or as a general pattern. Thus Meadowlark must have custom code to account for the following situations:

- Create a Survey: does the session exist? No: respond with status 400 bad request and do not save the document. Yes: save the document.

- Update a Survey: does the session exist? No: respond with status 400 bad request and do not save the document. Yes: replace the document.

- Delete a Session: are there any Surveys that reference this session? Yes: respond with status 409 conflict. No: delete the document.

Imagine the following sequence of actions being taken virtually simultaneously by two different API clients:

| one | two |

|---|---|

| begin transaction | |

| delete document A | begin transaction |

if document A exists: save document B else: error | |

| commit | commit |

How do we accomplish this safely?

Referential Integrity Pattern

The basic pattern that Meadowlark employs for reference validation is to convert the identity and reference portions of a document into document ids. Reference validation then consists of simple document id lookups, which are possible even in key/value-like datastores. However, in order to maintain consistency during validation a datastore must support ACID transactions. It must also provide a way to lock documents on reads in a transaction such that Meadowlark upserts, updates and deletes can stay consistent with those referenced documents.

Solutions

DynamoDB

DynamoDB provides ACID transactions. It also provides transaction "condition checks" that are like read locks, but have limitations on what can be checked. It turns out that condition checks are too limited to support consistent delete operations with the Meadowlark pattern. Instead, it would require a more generalized read locking behavior.

There is no built-in solution for this in DynamoDB. There is a java client that introduces pessimistic offline locking support - in other words, a client locks a record by updating a lock column with a unique value. Other clients can't access that record until the lock column is cleared. This might be sufficient to support the Meadowlark pattern. However, the development team is not going to develop the JavaScript code to investigate or support this. This may need to be a full replacement for native DynamoDB transactions, and the performance implications are unclear.

Therefore DynamoDB will be removed from Meadowlark release 0.2.0.

PostgreSQL (using non-relational pattern)

PostgreSQL has built-in mechanisms for explicitly locking a record. These can be used either to prevent a competing DELETE from occurring in the middle of an INSERT or UPDATE transaction, or to prevent an INSERT or UPDATE from occurring during a previously-started DELETE transaction.

The article Selecting for Share and Update in PostgreSQL does a nice job of explaining two of the lock modes: select for share and select for update.

With a plain select statement by client two, the save will proceed... which is NOT what we want to happen. To resolve that, we can append for share nowait at the end of the select statement. This will have the effect of locking document A against updates momentarily, without locking out reads by any other client. If client one has already issued a delete statement, however, then the lock will fail. The nowait keyword tells the database engine to fail immediately, rather than wait for client one's lock to be released.

The following code demonstrates the desired behavior:

const pg = require('pg');

const { exit } = require('process');

const dbConfiguration = {

host: process.env.POSTGRES_HOST ?? 'localhost',

port: Number(process.env.POSTGRES_PORT ?? 5432),

user: process.env.POSTGRES_USER,

password: process.env.POSTGRES_PASSWORD,

database: process.env.MEADOWLARK_DATABASE_NAME ?? 'meadowlark',

};

const parentId = `parent${Math.random() * 100}`;

const referencingDocumentId = `reference${Math.random() * 1000}`;

async function RunTest() {

const clientOne = new pg.Client(dbConfiguration);

await clientOne.connect();

const clientTwo = new pg.Client(dbConfiguration);

await clientTwo.connect();

// Create sample records

const insertParent = `

insert into meadowlark.documents (document_id, document_identity, project_name, resource_name, resource_version, is_descriptor, validated, edfi_doc)

values ('${parentId}', '{}', 'edfi', 'test', '3.3b', False, True, '{}');

`;

await clientOne.query('begin');

await clientOne.query(insertParent);

await clientOne.query('commit');

// Issue a delete statement _without_ committing the transaction

const deleteParent = `delete from meadowlark.documents where document_id = '${parentId}';`;

await clientOne.query('begin');

await clientOne.query(deleteParent);

// And now in a separate client, try to insert a doc that references the parent

const referenceCheck = `

select id from meadowlark.documents where document_id = '${parentId}';

`;

const insertReference = `

insert into meadowlark.documents (document_id, document_identity, project_name, resource_name, resource_version, is_descriptor, validated, edfi_doc)

values ('${referencingDocumentId}', '{}', 'edfi', 'test', '3.3b', False, True, '{}');

insert into meadowlark.references (parent_document_id, referenced_document_id)

values ('${parentId}','${referencingDocumentId}');`;

try {

await clientTwo.query('begin');

const res = await clientTwo.query(referenceCheck);

if (res.rows.length === 0) {

console.info('no record found! an API would return 400 due to missing parent.');

exit();

}

await clientTwo.query(insertReference);

await clientTwo.query('commit');

} catch (error) {

console.info('unexpected failure on initial insert of reference');

console.error(error);

}

// That didn't fail! Try now using the SELECT ... FOR UPDATE approach. "nowait" is essential here; if you remove it, then

// clientTwo will wait for clientOne to finish.

const selectForUpdate = `

select id from meadowlark.documents where document_id = '${parentId}' for share nowait;

insert into meadowlark.documents (document_id, document_identity, project_name, resource_name, resource_version, is_descriptor, validated, edfi_doc)

values ('${referencingDocumentId}', '{}', 'edfi', 'test', '3.3b', False, True, '{}');

insert into meadowlark.references (parent_document_id, referenced_document_id)

values ('${parentId}','${referencingDocumentId}');`;

try {

await clientTwo.query('begin');

await clientTwo.query(selectForUpdate);

} catch (error) {

console.info('EXPECTED failure on second insert reference');

console.info(error);

}

// Close these clients in reverse order

clientOne.query('commit');

clientTwo.query('commit');

// Cleanup

clientOne.end();

clientTwo.end();

}

RunTest().finally(() => console.log('Done'));

MongoDB

MongoDB supports ACID transactions but does not have native lock-on-read support. However, there is a common pattern used to simulate this by updating documents using a randomly-generated lock field. See the MongoDB blog post How to SELECT...FOR UPDATE Inside MongoDB Transactions for details.

By implementing the lock field pattern, MongoDB transactions will fail with a WriteConflict if, for example, a document read in one transaction is deleted in another. These transactions can then be retried or reported back to the client as a conflict, as appropriate.

The following unit test code demonstrates the desired behavior:

Downstream Data Storage

Downstream data stores - including OpenSearch and filesystem ("data lake" - could theoretically become out of sink in this event-driven architecture:

- Network error

- Bug in the event handler code

- Faulty downstream service.

Further research is needed on patterns for detecting and correcting these situations.

This is technically an eventual consistency problem, but it could have the same effect as a referential integrity error.

Table of Contents

- No labels