
Migration Utility v1.1.0

One script per table in each directory, where possible

Scripts are named in the format `#### TableName [optional_tags].sql`. This convention does not apply to operations that are performed dynamically.
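As a rough illustration of why this naming helps during troubleshooting, the following Python sketch recovers the sequence number, table name, and tags from a script file name. It assumes that `####` is a numeric ordering prefix and that multiple tags are comma-separated inside the brackets; both are assumptions about the convention, and `parse_script_name` and `ScriptInfo` are hypothetical names, not part of the Migration Utility.

```python
import re
from dataclasses import dataclass
from typing import Tuple

# Assumed shape: "<digits> <TableName> [optional, tags].sql"
SCRIPT_NAME = re.compile(
    r"^(?P<sequence>\d+)\s+(?P<table>[^\[\]]+?)\s*(?:\[(?P<tags>[^\]]*)\])?\.sql$"
)


@dataclass(frozen=True)
class ScriptInfo:
    sequence: int
    table: str
    tags: Tuple[str, ...]


def parse_script_name(file_name: str) -> ScriptInfo:
    """Split a migration script file name into sequence, table, and tags."""
    match = SCRIPT_NAME.match(file_name)
    if match is None:
        raise ValueError(f"Not in '#### TableName [optional_tags].sql' form: {file_name}")
    raw_tags = match.group("tags") or ""
    # Tags are assumed to be comma-separated inside the brackets.
    tags = tuple(tag.strip() for tag in raw_tags.split(",") if tag.strip())
    return ScriptInfo(int(match.group("sequence")), match.group("table").strip(), tags)
```

For example, `parse_script_name("0010 StaffCredential [add primary key].sql")` should yield a `ScriptInfo` with sequence 10, table `StaffCredential`, and the single tag `add primary key`.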

Troubleshooting, Timeout prevention

Custom, unknown extensions on the ODS are common. As part of the process of upgrading a highly customized ODS, an installer is likely to run into a SQL exception somewhere in the middle of the upgrade (usually caused by a foreign key dependency, schema-bound view, etc.).

In general, we do not want to attempt to modify an unknown/custom extension on an installer's behalf to try and prevent this from happening. It is important that an installer be aware of each and every change applied to their custom tables. Migration of custom extensions will be handled by the installer.

Considering the above, in the event an exception does occur during upgrade, we want to make the troubleshooting process as easy as possible. If an exception is thrown, an installer should immediately be able to tell:

- Which table was being upgraded when it occurred (from the file name)

- What were the major changes being applied (from the file tags)

- What went wrong (from the exception message)

- Where to find the code that caused it

Many issues may be fixable from the above information alone. If more detail is needed, the installer can view the code in the referenced script file. By separating script changes by table, we make an effort to ensure that there are only a few lines to look through (rather than hundreds).

In addition, each script will be executed in a separate transaction. Operations such as index creation can take a long time on some tables in a large ODS. Splitting the code into separate transactions helps prevent unexpected timeout events.

The major downside of this approach is the large number of files it can produce. For example, the v2x to v3 upgrade was a case where all existing tables saw modifications. This convention generates a change script for every table in more than one directory.

As updates become more frequent, future versions should not be impacted as heavily.

Most change logic is held in SQL scripts (as of v3)

As of v3: Most of the upgrade logic is performed from the SQL scripts, rather than using the .NET-based upgrade utility to write database changes directly.

As of v3, most upgrade tasks are simple enough that they can be executed straight from SQL (given a few stored procedures to get started).

Given this advantage, effort was made to ensure that each part of the migration tool (console utility, library, integration tests) could be replaced individually as needed.

The current upgrade utility contains a library making use of DbUp to drive the upgrade process. In the future, if/when this tool no longer suits our needs, we should be able to take existing scripting and port it over to an alternative upgrade tool (such as RoundhousE), or even a custom-built tool if the need ever arises.

This convention could (and should) change in the future if upgrade requirements become too complex to execute from SQL scripting alone.

Two types of data validation/testing options

- Dynamic, SQL based

  - Ensures data that is expected to remain the same does not change

  - Can run on any ODS in the field, even if the data is unknown

- Integration tests

  - Run on a known, given set of inputs

  - Used to test logic in areas where changes should occur

Prevent data loss

The first type of validation (dynamic, SQL-based) is executed on data that we know should never change during the upgrade.

- The source and destination tables do not need to be the same. This validation type is most commonly used to verify that data was correctly moved to the expected destination table during upgrade

- Can be executed on any field ODS to ensure that unknown datasets do not cause unexpected data loss during upgrade

- The data in these tables does not need to be known
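The utility implements this check with reusable SQL stored procedures. Purely to illustrate the idea, the following Python sketch computes an order-independent hash of rows that must not change (for example, primary key values) before the upgrade, then recomputes it on the destination afterwards and fails if the two differ. The names `table_fingerprint`, `validate_unchanged`, and `DataValidationError` are hypothetical, and SHA-256 over each row's `repr` is an illustrative choice, not the utility's actual hashing scheme.

```python
import hashlib
from typing import Iterable, Sequence


class DataValidationError(Exception):
    """Raised when data expected to stay unchanged was modified."""


def table_fingerprint(rows: Iterable[Sequence[object]]) -> str:
    """Return an order-independent SHA-256 fingerprint of the given rows."""
    # Encode each row deterministically, then sort so row order does not matter.
    encoded_rows = sorted(repr(tuple(row)).encode("utf-8") for row in rows)
    digest = hashlib.sha256()
    for encoded in encoded_rows:
        # Hash each row separately so row boundaries cannot blur together.
        digest.update(hashlib.sha256(encoded).digest())
    return digest.hexdigest()


def validate_unchanged(
    stored_fingerprint: str,
    destination_rows: Iterable[Sequence[object]],
    destination_name: str,
) -> None:
    """Compare a fingerprint stored before the upgrade with the destination rows after it."""
    if table_fingerprint(destination_rows) != stored_fingerprint:
        raise DataValidationError(
            f"A data validation failure was encountered on destination object {destination_name}"
        )
```

Because the stored fingerprint and the destination are hashed independently, the destination can be a different table than the source, as long as the same columns are projected in the same order.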

The second type of data validation, integration test based, is used to test the logic and transformations where we know the data should change:

- For example, during the `v2xtov3` upgrade, descriptor namespaces are converted from the 2.x `http://EdOrg/Descriptor/Name.xml` format to the 3.x `uri://EdOrg/Name` format. Integration tests are created to ensure that the upgrade logic is functioning correctly for several known inputs.
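The actual conversion is done in the SQL scripts; the following Python sketch only restates the format change described above and checks it against a small table of known inputs, in the spirit of the integration tests. It assumes every 2.x namespace has exactly the `http://EdOrg/Descriptor/Name.xml` shape; real data in the field may not, and `convert_descriptor_namespace` and the sample inputs are hypothetical.

```python
import re

# Assumed 2.x shape: http://<EdOrg>/Descriptor/<Name>.xml
_V2_NAMESPACE = re.compile(r"^http://(?P<edorg>[^/]+)/Descriptor/(?P<name>[^/]+)\.xml$")


def convert_descriptor_namespace(v2_namespace: str) -> str:
    """Convert a 2.x descriptor namespace to the 3.x 'uri://EdOrg/Name' format."""
    match = _V2_NAMESPACE.match(v2_namespace)
    if match is None:
        raise ValueError(f"Unrecognized 2.x descriptor namespace: {v2_namespace!r}")
    return f"uri://{match.group('edorg')}/{match.group('name')}"


# Known inputs and expected outputs, as an integration test might assert them.
KNOWN_INPUTS = {
    "http://ed-fi.org/Descriptor/AcademicSubjectDescriptor.xml": "uri://ed-fi.org/AcademicSubjectDescriptor",
    "http://www.grandbend.edu/Descriptor/GradeLevelDescriptor.xml": "uri://www.grandbend.edu/GradeLevelDescriptor",
}

for v2_value, expected in KNOWN_INPUTS.items():
    assert convert_descriptor_namespace(v2_value) == expected
```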

Together, the two validation types (validation of data that changes, and validation of data that does not change) can be used to create test coverage wherever it is needed for a given ODS upgrade.

The dynamic validation is performed via reusable stored procedures that are already created and available during upgrade.

See scripts in the "*Source Validation Check" and "*Destination Validation Check" directories for example usages.

Upgrade Issue Resolution Approach

It is common to encounter scenarios where data cannot be upgraded directly from one major version to another due to schema changes. A common example is a primary key change that causes records allowed by the previous version's schema to be considered duplicates under the upgraded schema, and therefore rejected.

The general approaches included here are a result of collaboration with the community on how to resolve these common situations, and are documented here as a reference for utility developers to apply in their own work.

- Approach 1: Make non-breaking, safe changes to data on the user's behalf. This is the preferred option when practical and safe to do so.

  - Example: Duplicate session names in the v2.x schema that would break the new v3.x primary key will get a term name appended during upgrade to meet schema requirements.

  - Consider logging a warning for the user to review, to inform them that a minor change has taken place, and mention the log file in documentation.

- Approach 2: Throw a compatibility exception asking the user to intervene with updates. This is used when we are unable to safely make assumptions on the user's behalf to perform the update for them.

  - See the troubleshooting section of this document for an example of this.

  - The returned user-facing message should explain what updates are required, and very briefly include the reason why if it is not immediately obvious.

  - Compatibility exceptions should be thrown before proceeding with core upgrade tasks.

    - We want to make sure that we are not asking someone to make updates to a database in an intermediate upgrade state.

  - Each message class has a corresponding error code, mirrored in code by an enumeration.

    - This is done so that specific compatibility scenarios can be covered by integration tests where needed.

- Approach 3: Back up deprecated objects that cannot be upgraded into another schema before dropping them. This is a last-resort option that should be mainly restricted to deprecated items that no longer have a place in the new model.

  - As a general approach, it is preferred to avoid dropping data items without a way to recover them in case of disaster. The user may choose to delete the backup if they desire.

  - Avoid this option for tables that exist in both models but simply cannot be upgraded. Should this (hopefully rare) situation occur, consider throwing a compatibility exception instead and asking the user to back up/empty the table before proceeding.
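The first two approaches can be sketched in Python as follows. The session example assumes the new key is (SchoolId, SchoolYear, SessionName) and uses a hypothetical "Name (Term)" format; the real scripts may format the appended term differently. The error-code enumeration and exception class are likewise hypothetical stand-ins for the utility's own types; they only show how a stable code lets a test assert on a specific compatibility scenario.

```python
from collections import Counter
from enum import IntEnum
from typing import List, Tuple

# (SchoolId, SchoolYear, SessionName, term name); layout is an assumption.
Session = Tuple[int, int, str, str]


def disambiguate_session_names(sessions: List[Session]) -> List[Session]:
    """Approach 1: append the term name to session names that would collide.

    Only names duplicated within the same school and school year are changed.
    """
    counts = Counter((school, year, name) for school, year, name, _ in sessions)
    renamed: List[Session] = []
    for school, year, name, term in sessions:
        if counts[(school, year, name)] > 1:
            name = f"{name} ({term})"  # hypothetical naming format
        renamed.append((school, year, name, term))
    return renamed


class CompatibilityErrorCode(IntEnum):
    """Approach 2: hypothetical error codes, one per message class."""

    EXTENSION_BYPASS_REQUIRED = 1
    STAFF_CREDENTIAL_STATE_REQUIRED = 2


class CompatibilityException(Exception):
    """Raised before core upgrade tasks begin, carrying a testable error code."""

    def __init__(self, code: CompatibilityErrorCode, message: str) -> None:
        super().__init__(f"Action Required: {message}")
        self.code = code
```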

Usage Walkthrough

This section explains how to upgrade an existing v2.x ODS / API to version 3.4. The ODS v3.4 database update includes the latest enhancements based on feedback from the Ed-Fi Community.

The steps can be summarized as:


A compatibility reference chart, a command-line parameter list, and a troubleshooting guide are included. Each upgrade step is outlined in detail below.

Step 1. Read the Ed-Fi ODS v3.4 Upgrade Overview

Before you get started, you should review and understand the information in this section.

Target Audience

These instructions have been created for technical professionals, including software developers and database administrators. The reader should be comfortable with performing the following types of tasks:

- Creating and managing SQL Server database backups

- Performing direct SQL commands and queries

- Executing a command-line based tool that will perform direct database modifications

- Creating a configuration file for upgrade (.csv format)

- Writing custom database migration scripts (Extended ODS only)

General Notes

- Your v2.x Ed-Fi ODS / API will be checked for compatibility automatically during the migration process. If changes are needed, you will be prompted at the command line by the migration utility. A summary of commonly encountered compatibility conditions has been included in this section for reference.

- The new v3.4 schema contains upgrades to the structure of primary keys on several tables. In most instances, these new uniqueness requirements will be resolved automatically for you with no action required.

- There are some areas where new identities cannot be generated automatically on your behalf during upgrade. These tables will need to be updated manually.
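Most of these uniqueness problems come down to rows that collide once the new primary key is applied. As a hedged illustration, the following Python sketch groups exported rows by the columns of a new key and reports any group with more than one row. The column list comes from the GradingPeriod entry in the troubleshooting guide; `find_new_key_collisions` is a hypothetical helper, and the utility's own compatibility checks remain authoritative.

```python
from collections import defaultdict
from typing import Dict, Hashable, List, Mapping, Sequence, Tuple

Row = Mapping[str, Hashable]


def find_new_key_collisions(
    rows: Sequence[Row], new_key_columns: Sequence[str]
) -> Dict[Tuple[Hashable, ...], List[Row]]:
    """Return groups of rows that share the same values for the new primary key."""
    groups: Dict[Tuple[Hashable, ...], List[Row]] = defaultdict(list)
    for row in rows:
        groups[tuple(row[column] for column in new_key_columns)].append(row)
    # Only keys with more than one row would violate the new uniqueness rule.
    return {key: members for key, members in groups.items() if len(members) > 1}


GRADING_PERIOD_KEY = ["SchoolId", "GradingPeriodDescriptorId", "PeriodSequence", "SchoolYear"]
```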

Compatibility Conditions

This section describes compatibility conditions (i.e., requirements that may need intervention for the compatibility tool to function properly) and suggested remediation.

Development Overview: The Basics

The table below describes files and folders used by the Migration Utility along with a description and purpose for each resource.

| Overview Item | Needed by Whom? | Brief Description & Purpose |
|---|---|---|
| Script Directory: `\Scripts` | Users writing custom upgrade scripts; maintainers of the upgrade utility | All database upgrade scripts go here, including Ed-Fi upgrade scripts, custom upgrade scripts (user extensions), compatibility checks, and dynamic / SQL-based validation. Subdirectories contain code for each supported ODS upgrade version. |
| Directory: `\Descriptors` | Maintainers of the upgrade utility only | Ed-Fi-Standard XML files containing Descriptors for each ODS version. These XML files are imported directly by the scripting in the `Utilities\Migration\Scripts` directory above. |
| Library/Console: `EdFi.Ods.Utilities.Migration` (console application created via `dotnet publish`) | Maintainers of the upgrade utility only | Small, reusable library making use of DbUp to drive the main upgrade. Executes the SQL scripts contained in the `Utilities\Migration\Scripts` directory above. Takes a configuration object as input, and chooses the appropriate scripts to execute based on ODS version and current conventions. |
| Test Project: `EdFi.Ods.Utilities.Migration.Tests` | Maintainers of the upgrade utility only (does not contain test coverage for extensions) | Contains integration tests that perform a few test upgrades and assert that the output is as expected. Like the console utility, makes direct use of the `EdFi.Ods.Utilities.Migration` library described above. |
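To make "chooses the appropriate scripts to execute based on ODS version" concrete, here is a minimal Python sketch that chains single-version upgrade steps from a starting version to a target, defaulting to the newest known version (mirroring the `--ToVersion` default in the parameter reference). The step list, version strings, and subdirectory names are all hypothetical; the real library's configuration object and directory layout may differ.

```python
from typing import List, Optional, Sequence, Tuple

# (from_version, to_version, script subdirectory); all values are hypothetical.
UpgradeStep = Tuple[str, str, str]

EXAMPLE_STEPS: List[UpgradeStep] = [
    ("2.x", "3.1", "v2x to v3.1"),
    ("3.1", "3.1.1", "v3.1 to v3.1.1"),
    ("3.1.1", "3.4", "v3.1.1 to v3.4"),
]


def plan_upgrade(
    steps: Sequence[UpgradeStep], from_version: str, to_version: Optional[str] = None
) -> List[str]:
    """Return the script subdirectories to run, in order.

    Steps must be listed oldest first. When to_version is omitted, the newest
    known version is the target.
    """
    target = to_version if to_version is not None else steps[-1][1]
    current = from_version
    plan: List[str] = []
    for start, end, directory in steps:
        if current == target:
            break
        if start == current:
            plan.append(directory)
            current = end
    if current != target:
        raise ValueError(f"No upgrade path from {from_version} to {target}")
    return plan
```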

Development Troubleshooting

This section outlines general troubleshooting procedures.

Compatibility Errors

- Before the schema is updated, the ODS data is checked for compatibility. If changes are required, the upgrade will stop and an exception will be thrown with instructions on how to proceed.

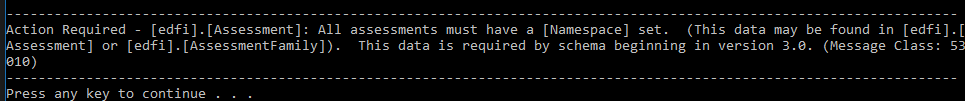

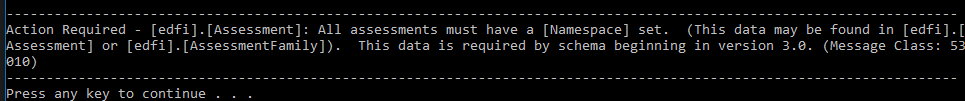

An example error message follows:

- After making the required changes (or writing custom scripts), simply launch the upgrade utility again. The upgrade will proceed where it left off and retry with the last script that failed.

Other Exceptions During Development

- Similar to compatibility error events, the upgrade will halt and an exception message will be generated during development if a problem is encountered.

- After making updates to the script that failed, simply re-launch the upgrade tool. The upgrade will proceed where it left off, starting with the last script that failed.

- If you are testing a version that is not yet released, or if you need to re-execute scripts that were renamed or modified during active development: restore your database from backup and start over.

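- Similar to other database migration tools, a log of scripts successfully executed will be stored in the default journal table. DbUp's default location is `[dbo].[SchemaVersions]`.

- A log file containing errors/warnings from the most recent run may be found by default in `C:\ProgramData\Ed-Fi-ODS-Migration\Migration.log`. Depending on how the utility was installed, it may instead be found in `"{YourInstallFolder}\.store\edfi.suite3.ods.utilities.migration\{YourMigrationUtilityVersion}\edfi.suite3.ods.utilities.migration\{YourMigrationUtilityVersion}\tools\netcoreapp3.1\any\Ed-Fi-Migration.log"`.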
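The resume behavior described above (journal successful scripts, run each script in its own transaction, stop at the first failure, and pick up from the failed script on the next launch) can be sketched in Python with an in-memory SQLite database. This is only an analogy for what DbUp does against SQL Server or PostgreSQL; `run_scripts` and the `SchemaVersions` layout here are simplified assumptions. Note that the journal records scripts by name only, which is why scripts renamed or modified after running require a restore from backup.

```python
import sqlite3
from typing import Iterable, List, Sequence, Tuple

Script = Tuple[str, Sequence[str]]  # (script name, SQL statements)


def run_scripts(conn: sqlite3.Connection, scripts: Iterable[Script]) -> List[str]:
    """Run each script not yet in the journal, one transaction per script.

    Stops at the first failing script (its changes are rolled back) and
    re-raises, so a later call resumes from that script. Returns the names
    of scripts executed during this call.
    """
    conn.isolation_level = None  # manage transactions explicitly with BEGIN/COMMIT
    conn.execute("CREATE TABLE IF NOT EXISTS SchemaVersions (ScriptName TEXT PRIMARY KEY)")
    done = {name for (name,) in conn.execute("SELECT ScriptName FROM SchemaVersions")}
    executed: List[str] = []
    for name, statements in scripts:
        if name in done:
            continue  # already journaled by a previous run
        conn.execute("BEGIN")
        try:
            for sql in statements:
                conn.execute(sql)
            conn.execute("INSERT INTO SchemaVersions (ScriptName) VALUES (?)", (name,))
            conn.execute("COMMIT")
        except sqlite3.Error:
            conn.execute("ROLLBACK")  # leave no partial changes from this script
            raise
        executed.append(name)
    return executed
```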

Additional Troubleshooting

- The step-by-step usage guide below contains runtime troubleshooting information.

Design/Convention Overview

The following outlines some important conventions in the as-shipped Migration Utility code.

In-place upgrade

Database upgrades are performed in place rather than creating a new database copy

Extensions

- Preserve all unknown data in extension tables

- Ensure errors and exceptions are properly generated and brought to the upgrader's attention during migration if the upgrade conflicts with an extension or any other customization

As a secondary concern, this upgrade method was chosen to ease the upgrade process for a Cloud-based ODS (e.g., on Azure).

Installers with an extended ODS (any dataset with an extension schema or foreign keys pointing to the `edfi` schema) will be notified, and must explicitly acknowledge this by adding the BypassExtensionValidationCheck option at the command line when upgrading. This ensures that the installer is aware that custom upgrade scripts may be required.

Sequence of events that occur during upgrade

Specifics differ for each version, but in general the upgrade sequence executes as follows:

- Validate user input at the command line

- Create tempdata (tables/stored procedures) that will be used for upgrade

- Check current ODS data for upgrade compatibility, and display action messages to the user if existing data requires changes

- Before modifying the `edfi` schema: calculate and store hash values for primary key data that is expected NOT to change during upgrade

- Drop views, constraints, stored procs

- Import descriptor data from XML

- Create all new tables for this version that did not previously exist

- Update data in existing tables

- Drop old tables

- Create views/constraints/stored procs for the new version

- Validation check: recalculate the hash codes generated previously, and make sure that all data that is not supposed to change was not mistakenly modified

- Drop all temporary migration data

Minimize the number of scripts with complex dependencies on other scripts in the same directory/upgrade step.

- Compatibility checks are designed to run before any changes to the `edfi` schema have been made. This prevents the user from having to deal with a half-upgraded database while making updates.

- Initial hash codes used for data validation also must be generated before touching the `edfi` schema to ensure accuracy.

- It is also better for performance to do this step while all of our indexes are still present.

- Dropping of constraints, views, etc. is taken care of before making any schema changes to prevent unexpected SQL exceptions.

- New descriptors are imported as an initial step before making changes to the core tables. This ensures that all new descriptor data is available in advance for reference during updates

- After creation of descriptors, the sequence of the next steps is designed to ensure that all data sources exist unmodified on the old schema where we expect it to.

- Create tables that are brand new to the schema only (and populate them with existing data)

- Modify existing tables (add/drop columns, etc)

- Drop old tables no longer needed

- Foreign keys, constraints, etc are all added back in once the new table structure is fully in place.

- Once the `edfi` schema is fully upgraded and will receive no further changes, we can perform the final data validation check.

The v2x to v3 upgrade is a good example case to demonstrate the upgrade steps working together due to its larger scale:

- For `v2xtov3`: All foreign keys and other constraints were dropped during this upgrade in order to adopt the new naming conventions.

- Also for `v2xtov3`: ODS types were replaced with new descriptors. This change impacted nearly every table on the existing schema.



Other Compatibility Conditions

There are several other less common items not included above. The migration utility will check for these items automatically and provide guidance messages as needed. For additional technical details, please consult the Troubleshooting Guide below.

Step 2. Install Required Tools

- Ensure that you have an instance of the Ed-Fi ODS / API running locally that has been set up following the instructions in /wiki/spaces/ODSAPI33/pages/26443830.


Ed-Fi ODS Migration Tool Parameter Reference

| Parameter | Description | Example | Required? |
|---|---|---|---|
| --Database | Database connection string | --Database "Data Source=YOUR\SQLSERVER;Initial Catalog=Your_EdFi_Ods_Database;Integrated Security=True" | Yes |
| --Engine | Database engine type (SQLServer or PostgreSQL) | --Engine PostgreSQL | No (defaults to SQLServer) |
| --DescriptorNamespace | Descriptor namespace prefix to be used for new and upgraded descriptors. Namespace must be provided in suite 3 format as follows: … Valid characters for an education organization name: alphanumeric and … Script usage: the provided string value will be escaped and substituted directly in applicable SQL where the … | --DescriptorNamespace "uri://ed-fi.org" | Yes |
| --CredentialNamespace | Namespace prefix to be used for all new Credential records. Namespace must be provided in suite 3 format as follows: … Valid characters for an education organization name: alphanumeric and … Script usage: the provided string value will be escaped and substituted directly in applicable SQL where the … | --CredentialNamespace "uri://ed-fi.org" | Yes, if table edfi.StaffCredential has data; optional if table edfi.StaffCredential is empty |
| --CalendarConfigFilePath | Path to calendar configuration, which must be accessible from your SQL Server. Script usage: the provided string value will be substituted directly in dynamic SQL where the … | --CalendarConfigFilePath "C:\PATH\TO\YOUR\CALENDAR_CONFIG.csv" | Single-year ODS: No (unless prompted by the upgrade tool); multi-year ODS: Yes |
| --DescriptorXMLDirectoryPath | Path to directory containing 3.1 descriptors for import, which must be accessible from your SQL Server. Script usage: the provided string value will be substituted directly in dynamic SQL where the … | --DescriptorXMLDirectoryPath "C:\PATH\TO\YOUR\DESCRIPTOR\XML" | Local upgrade: No (applicable to most cases); remote upgrade: Yes. Used if the Descriptor XML directory has been moved to a different location accessible to your SQL Server |
| --BypassExtensionValidationCheck | Permits the migration tool to make changes if extensions or external schema dependencies have been found | --BypassExtensionValidationCheck | Extended ODS: Yes. This includes any dataset with an extension schema or foreign keys pointing to the Ed-Fi schema. Others: No |
| --CompatibilityCheckOnly | Perform a dry run for testing only to check ODS compatibility without making additional changes. The database will not be upgraded. | --CompatibilityCheckOnly | No. This is an optional feature. |
| --Timeout | SQL command query timeout, in seconds. | --Timeout 1200 | No (useful mainly for development and testing purposes) |
| --ScriptPath | Path to the location of the SQL scripts to apply for upgrade, if they have been moved | --ScriptPath "C:\PATH\TO\YOUR\MIGRATION_SCRIPTS" | No (only needed if scripts have been moved from the default location; useful mainly for development and testing purposes) |
| --FromVersion | The ODS/API version you are starting from. | --FromVersion "3.1.1" | No. The migration utility will detect your starting point. |
| --ToVersion | The ODS/API version that you would like to upgrade to. | --ToVersion "5.3" | No. The migration utility will take you to the latest version by default. |
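When scripting repeated test runs, it can help to assemble the command line programmatically. The following Python sketch builds an argument list from a subset of the parameters in the table above; the executable name is an assumption (adjust it to match your installation), and the namespace check only verifies the `uri://` prefix because the full format rules are not reproduced here. Passing a list, rather than one shell string, to `subprocess.run(args, check=True)` avoids quoting problems with the connection string.

```python
from typing import List, Optional

# Assumption: the name of the console executable on your PATH; adjust as needed.
EXECUTABLE = "EdFi.Ods.Utilities.Migration"


def build_migration_args(
    database: str,
    descriptor_namespace: str,
    *,
    engine: Optional[str] = None,
    credential_namespace: Optional[str] = None,
    calendar_config_file_path: Optional[str] = None,
    bypass_extension_validation_check: bool = False,
    compatibility_check_only: bool = False,
) -> List[str]:
    """Assemble an argument list using parameters from the reference table."""
    for label, value in (
        ("DescriptorNamespace", descriptor_namespace),
        ("CredentialNamespace", credential_namespace),
    ):
        # Only the suite 3 "uri://" prefix is checked in this sketch.
        if value is not None and not value.startswith("uri://"):
            raise ValueError(f"--{label} must be provided in suite 3 (uri://...) format")
    args = [EXECUTABLE, "--Database", database, "--DescriptorNamespace", descriptor_namespace]
    if engine is not None:
        args += ["--Engine", engine]
    if credential_namespace is not None:
        args += ["--CredentialNamespace", credential_namespace]
    if calendar_config_file_path is not None:
        args += ["--CalendarConfigFilePath", calendar_config_file_path]
    if bypass_extension_validation_check:
        args.append("--BypassExtensionValidationCheck")
    if compatibility_check_only:
        args.append("--CompatibilityCheckOnly")
    return args
```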


Usage Troubleshooting Guide

The following section provides additional guidance for many common compatibility issues that can be encountered during the upgrade process.

| Error received during upgrade | Explanation | How to fix it |
|---|---|---|
| Action Required: Unable to proceed with migration because the BypassExtensionValidationCheck option is disabled ... | An external dependency on the edfi schema has been found. As a courtesy, the migration tool will not proceed with the upgrade process without your permission. Common examples: … Why: This notification is intended to bring extension items to your attention that will require manual handling. All primary keys and indexes on the … | After reviewing the data and dependencies on your extension tables, add the … Please review Step 8. Write Custom Migration Scripts for Extensions (above) before proceeding. |
| Action Required: edfi.StaffCredential ... | The column StateOfIssueStateAbbreviationTypeId must be non-null for all records. This value will become part of a new primary key on the 3.1 schema. | Add a [StateOfIssueStateAbbreviationTypeId] for all records in [edfi].[StaffCredential]. This is the abbreviation for the name of the state (within the United States) or extra-state jurisdiction in which a license or credential was issued. |
| Action Required - An EducationOrganizationId must be resolvable for every student in the following table(s) for compatibility with the upgraded schema starting in version 3.0: (Provided list of tables includes one or more of the following): … | The upgrade utility must be able to locate an [EducationOrganizationId] for every student with data in the listed tables to proceed. Beginning in v3.1, the schema structure requires that these student information items be defined separately for each associated EducationOrganization rather than simply linking them to a student. | The easiest way to meet this requirement is to ensure that every student has a corresponding record in [edfi].[StudentSchoolAssociation] or [edfi].[StudentEducationOrganizationAssociation]. The upgrade tool will use this information to handle the rest of the data conversion tasks for you. |
| Action Required: edfi.Assessment ... | All assessments must have a [Namespace] set. (This data may be found in [edfi].[Assessment] or [edfi].[AssessmentFamily].) In v3.x, the schema requires that this column be non-null. | Add a [Namespace] for all assessment records. |
| Action Required: edfi.OpenStaffPosition ... | There may be no two duplicate … This uniqueness is required for the upgraded primary key on this table. | Update the RequisitionNumber values on edfi.OpenStaffPosition. Ensure that the same value is not used twice for the same education organization. |
| Action Required: edfi.RestraintEvent ... | There may be no two duplicate … This uniqueness is required for the upgraded primary key on this table. | Update the … |
| Action Required: edfi.GradingPeriod ... | There may be no two duplicate PeriodSequence values for the same school during the same grading period. Additionally, if prompted by the upgrade tool, all … This compatibility requirement is a result of a primary key change between v2.x and v3 (suite 2 and suite 3). Old v2.0 primary … | Ensure that there are no two records with the same SchoolId, GradingPeriodDescriptorId, PeriodSequence, and SchoolYear. (Requirements may be less strict than noted in the above query for some multi-year calendars. See the compatibility check script in the 01 Bootstrap directory for exact technical requirements.) |
| Action Required: edfi.DisciplineActionDisciplineIncident ... | Every record in [edfi].[DisciplineActionDisciplineIncident] must have a corresponding record in [edfi].[StudentDisciplineIncidentAssociation] with the same [StudentUSI], [SchoolId], and [IncidentIdentifier]. The v3 schema no longer allows discipline action records with students that are not associated with the discipline incident. A foreign key will be added to the new schema enforcing this. | |
| Calendar configuration file error: various similar messages may appear that mention a table name and a list of school ids. Example error: Found {#} date ranges in [edfi].[ … | The calendar configuration file contains the start date and end date for each school year. To support the new calendar features in v3, the migration tool uses this configuration file to assign a SchoolYear to all CalendarDate related entries in the database. There are several variations of this type of error, which all have a similar meaning: the migration tool found date records in the specified table that could not be assigned a school year based on the BeginDate and EndDate information provided. | Either the calendar configuration file will need to be edited, or data in the specified table will need to be modified. Example: … |
| SqlException (or similar): The object '{OBJECT_NAME_HERE}' is dependent on column '{COLUMN_NAME_HERE}'. | This type of unhandled SQL exception occurs when the migration process tries to alter an item that is being referenced by an external object, such as a foreign key on another table, or a schema-bound view. Common causes: … | Make sure that you have dropped ALL foreign keys and views from other schemas that have a dependency on the … You do not need to drop any constraints on the edfi schema itself: this is handled automatically for you. For tips on locating foreign key dependencies quickly, see the query in Step 8b above. |
| A data validation failure was encountered on destination object {table name here} ... | Data was modified in a location that was not expected to change. A validation check from script directory Scripts/02 Setup/{version}/## Source Validation Check has failed. | This state is triggered if records that the upgrade utility expected to remain unchanged are modified in the middle of migration. The upgrade will be halted as a precaution to prevent unintended data loss. Common causes: … If you are testing potential updates to the v2.x ODS data by hand (data only / no custom scripts): … If you are writing your own custom scripts: … If your v2.x Ed-Fi ODS schema is unmodified, and you are not making edits to the current scripting or data, this error should not occur during normal operation. |
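For the calendar configuration errors above, it can help to check ahead of time which dates would fail to receive a school year. The following Python sketch parses a calendar configuration CSV and reports, per school, any date that falls in no configured range or in more than one (overlapping ranges). The `SchoolYear,BeginDate,EndDate` header and ISO date format are assumptions for illustration; check the configuration template that ships with the utility for the real layout. The function names are hypothetical.

```python
import csv
import io
from datetime import date
from typing import Dict, List, Optional, Tuple

YearRange = Tuple[int, date, date]  # (SchoolYear, BeginDate, EndDate)


def load_school_years(csv_text: str) -> List[YearRange]:
    """Parse a calendar configuration CSV (hypothetical column names)."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [
        (int(row["SchoolYear"]), date.fromisoformat(row["BeginDate"]), date.fromisoformat(row["EndDate"]))
        for row in reader
    ]


def assign_school_year(day: date, ranges: List[YearRange]) -> Optional[int]:
    """Return the single school year whose range contains day, else None."""
    matches = [year for year, begin, end in ranges if begin <= day <= end]
    # Zero matches (a gap) or several matches (overlapping ranges) are both unassignable.
    return matches[0] if len(matches) == 1 else None


def unassignable_dates(
    dates_by_school: Dict[int, List[date]], ranges: List[YearRange]
) -> Dict[int, List[date]]:
    """Group dates that cannot be assigned a school year by school id."""
    problems: Dict[int, List[date]] = {}
    for school_id, dates in dates_by_school.items():
        bad = [d for d in dates if assign_school_year(d, ranges) is None]
        if bad:
            problems[school_id] = bad
    return problems
```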